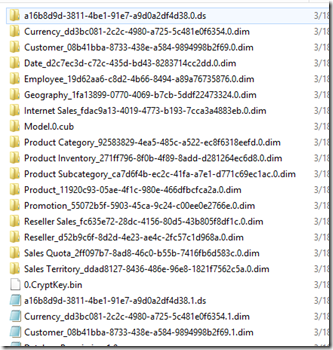

Some time ago, I blogged about using a date calculation dimension in a multidimensional cube, and how easy it makes time intelligence in a multidimensional model. 2014 seems to have started out as “the year of Power Pivot”: it is definitely gaining traction with my clients, and more and more of them are asking about it during engagements.

One of the things I get absolutely giddy about in Power Pivot is the concept of disconnected slicers. As Rob Collie best said, disconnected slicers in Power Pivot are pure magic. I particularly enjoy the “mad scientist” reference he makes. For those unfamiliar, a disconnected slicer is built on a table that has no relationship to anything else in your data model, but whose values you still want to use as part of your analysis. For a great introduction, see Rob’s post about it on his Power Pivot Pro blog.

I was recently showing a client this mad-scientist-level stuff, but then took the natural progression and thought: “If I can do this to control the number of months back, can I take it a step further and create a date calculation dimension like in multidimensional too?” What, that wasn’t the first thing you thought too?

First off, let’s create some measures in Power Pivot for our base value and our time intelligence calculations:

For this post, I’ve created four: Total Sales, Calendar YTD, Calendar Previous Period, and Calendar Previous Period Diff. These are typical time intelligence calculations; I didn’t do anything special or different with them. If you’re interested, these are the formulas:

Total Sales:=SUM([SalesAmount])

Calendar YTD:=TOTALYTD([Total Sales],'Date'[FullDateAlternateKey])

Calendar Previous Period:=CALCULATE([Total Sales],DATEADD('Date'[FullDateAlternateKey],-12,MONTH))

Calendar Previous Period Diff:=[Total Sales]-[Calendar Previous Period]

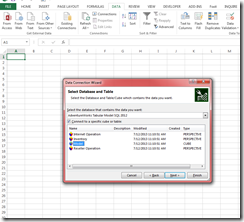
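The previous-period measure above shifts the date filter back 12 months, which amounts to looking at the same period last year. As a rough sketch, here is an alternative form using SAMEPERIODLASTYEAR that should return the same numbers, assuming a contiguous date table. The measure name `Calendar Previous Period Alt` is made up for illustration and is not in the workbook:

```dax
Calendar Previous Period Alt:=
// Hypothetical measure name; same idea as the DATEADD(..., -12, MONTH) version
CALCULATE([Total Sales],SAMEPERIODLASTYEAR('Date'[FullDateAlternateKey]))
```

Either form works for the rest of this post; the DATEADD version is handy if you later want to shift by something other than a full year.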

Not too bad so far. OK, let’s create a table to house our disconnected slicer. In Excel, create a table with the value you want to select and an “ID” column next to it:

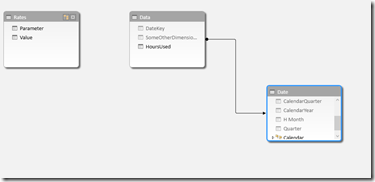

Then add it to your data model, either by copying and pasting or by clicking the “Add to Data Model” button on the Power Pivot ribbon. Below is my Power Pivot diagram view after importing. Notice that there is no relationship between the date calculation table and the fact table:

Back in the data view, we’ll navigate to the fact table and take the same approach Rob did for his disconnected slicer to return the currently selected slicer value:

Date Calculation Selected:=MIN('Date Calculation'[Value])

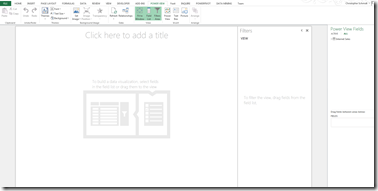

In the preceding formula, we’re taking the minimum of the Value column of the date calculation table. That gives us whichever value is active in the current filter context of each cell in our pivot table. In the image below, I’m selecting the value from the disconnected slicer table I created, along with the Date Calculation Selected measure from my fact table.

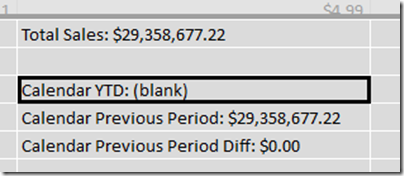
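One thing to be aware of with the MIN approach: when nothing is selected, or several items are selected at once, it quietly returns the smallest value in context. If you would rather make that fallback explicit, a minimal sketch could look like the following. It assumes 'Date Calculation'[Value] holds the numbers 1 through 4 and that your version of Power Pivot includes HASONEVALUE; the measure name is hypothetical and not part of the workbook:

```dax
Date Calculation Selected Guarded:=
// Hypothetical variation, not part of the workbook.
// Assumes 'Date Calculation'[Value] holds the numbers 1 through 4.
IF(
    HASONEVALUE('Date Calculation'[Value]),
    VALUES('Date Calculation'[Value]),   // exactly one value in context: use it
    1                                    // otherwise fall back to the first entry
)
```

When the table sits on the columns of a pivot, each column has exactly one value in context, so both versions behave the same there. The difference only shows up in totals or with a multi-select slicer.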

This is…AWESOME! However, when I go to drag on my time intelligence and performance measures, it doesn’t quite look right:

Whoa! That’s… not awesome. So let’s fix it. Back on the fact table, we can use a function called SWITCH, which works like a CASE statement in SQL. The syntax for SWITCH starts with the expression you want to evaluate, followed by pairs of a value to match and the result to return for it, all separated by commas. In this case, we want to swap in a different measure based on the [Date Calculation Selected] measure we created earlier:

Active Measure:=SWITCH([Date Calculation Selected],1,[Total Sales],2,[Calendar YTD],3,[Calendar Previous Period],4,[Calendar Previous Period Diff])

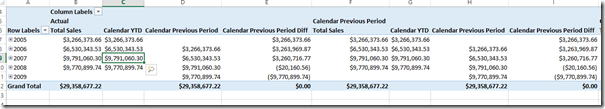
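SWITCH also accepts an optional final argument that is returned when none of the values match; without it, an unmatched selection comes back blank. As a sketch of that variation, here the fallback is Total Sales. The measure name `Active Measure With Default` is hypothetical, and the choice of default is only an assumption about what you would want to see:

```dax
Active Measure With Default:=
// Hypothetical variation, not part of the workbook.
SWITCH(
    [Date Calculation Selected],
    1, [Total Sales],
    2, [Calendar YTD],
    3, [Calendar Previous Period],
    4, [Calendar Previous Period Diff],
    [Total Sales]   // else: used when the selection matches none of 1 to 4
)
```

This matters mostly if you later add a row to the date calculation table and forget to add a matching case to the formula.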

We’re evaluating [Date Calculation Selected], and then returning a different measure based on what the active context is for that row. Let’s go back to our pivot table, take off all of those old values that didn’t look so good above, and replace them with our new “Active Measure” measure:

Oh. My. God. That is so AWESOME!!!! Even more amazing, we could take our date calculation table and put it in a slicer above the pivot, freeing up the columns section for something else. That makes this even more flexible, and ready to put on a dashboard. This way, we can actually have the MEASURES in a slicer!

That is some serious awesome-sauce! The complete workbook is available for download here.