A sometimes challenging business requirement is the concept of global transactions and sales. As a company, if you have sales in say, the United States, Canada, Germany, and Brazil, how do you report all of those sales together? There are 3 different ways the business could potentially want those reports. The first is that I want all of my reporting done in 1 currency that’s been standardized. Using the 4 countries above, let’s say that’s US Dollars. The second way I might want to view it is as stored as the native currency, and reporting is done in the native currency. The third is that everything is converted to one currency while it is stored and I want to report it as its native currency. Fortunately, with SSAS it’s easy to implement a solution that can provide the reporting around different currency types and SQL Server even as has a money type to make it easier to store this data. Each of the 3 different types is very similar in implementation from an Analysis Services wizard standpoint. As is pointed out in the book Expert Cube Development (by Marco Russo, Chris Webb, and Alberto Ferrari), currency conversion is a business decision, not a technological one. This means that the person determining the type of implementation (one-to-many, many-to-many, or many-to-one) should always be a business user. For the rest of this blog post I’ll be walking through implementing currency conversion in AS MD.

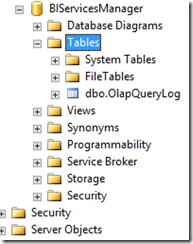

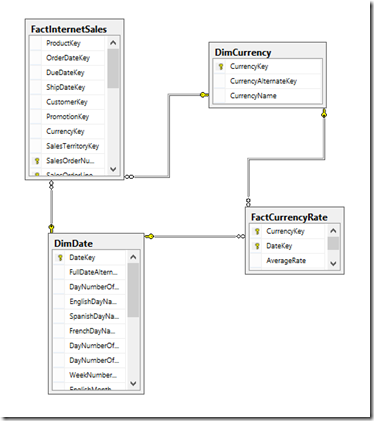

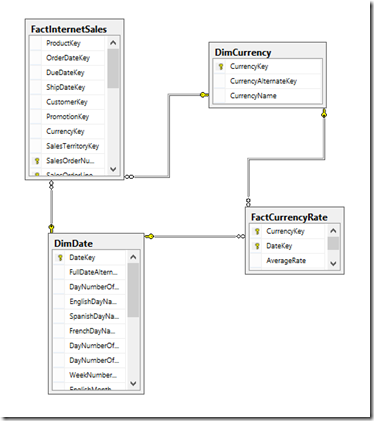

I am going to assume we are reporting off of a data model that is structured like the below, which lends itself well to currency conversion: (shamelessly lifted out of Adventure Works)

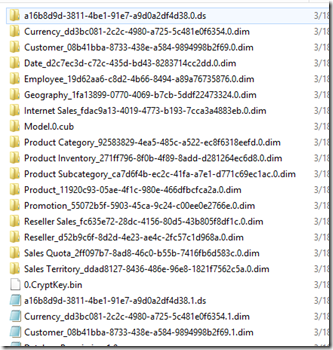

I’ve created my data source, my data source view, and 2 dimensions (currency and date). I’ve also created a small cube containing the sales amount from the FactInternetSales table and the FactCurrencyRates. In this model, we are collecting data in multiple currencies and want to convert it to a base currency of USD for reporting purposes. Let’s create our date dimension first:

Once you’ve created the attributes and hierarchies for the date dimension let’s tell AS MD that this is our date dimension, by clicking on the Add Business Intelligence wizard at the top left of the dimension designer and selecting Define Dimension Intelligence. On the next screen, select Time, then map the attributes of your date dimension to the corresponding attribute type.

Once that’s complete, create a currency dimension. The currency dimension should consist of at least the currency key and name. For more efficient storage on disk, for the key column, use a key column of the currency sk of the dimension and use a name column of the currency name. Since there is a one to one relationship between the 2 in the dimension, this will make it much faster for AS MD to run through the indexes at query time. My completed currency dimension looks like the below. Make sure you go into the Define Dimension Intelligence wizard again, but this time select currency, and map currency name to the currency attribute. Also in the currency dimension, select the currency attribute you created, and set the default member to your base currency (USD). The expression should look like [Currency].[Currency].&[100]. You always want to set the IsAggregatable property to false. For this dimension, you cannot create an ALL member, as summing across currencies doesn’t make any sense.

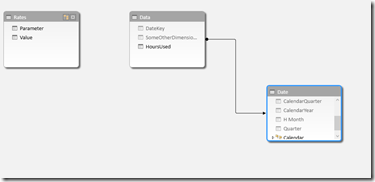

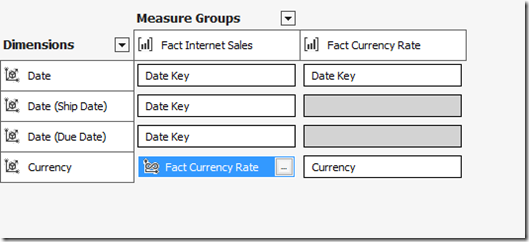

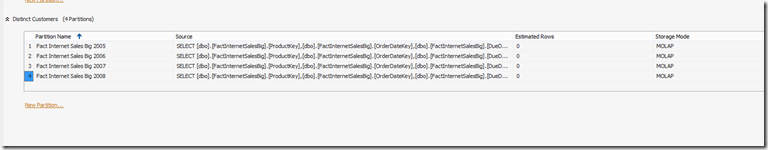

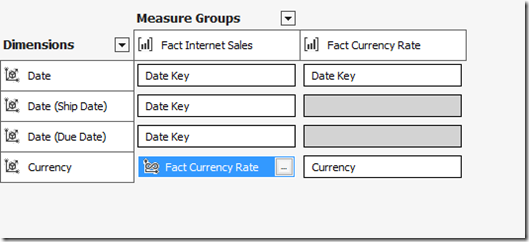

Now we’re ready to create a cube. Create the cube and select SUM for the Internet Sales In the cube, select the Internet Sales measure group and the currency rate conversion table, and change the currency rate measures for EndOfDayRate and AverageRate to LastNonEmpty. Then, move over to the dimension usage tab. ensure that the currency rate table is pointed to the date dimension, along with the date internet sales measure group you want to point to. My dimension usage screen looks like:

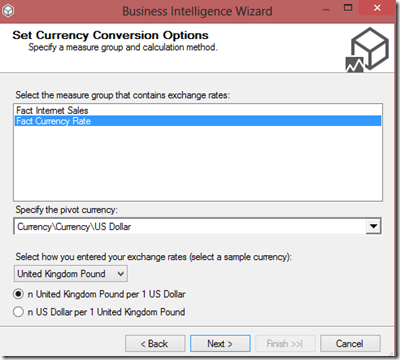

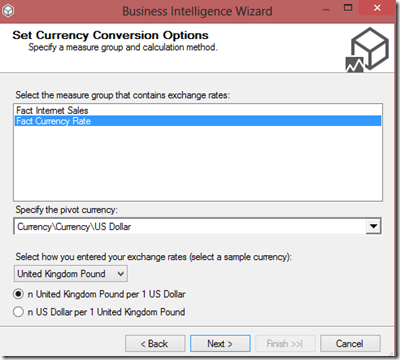

Now we’ll go back to the Cube structure, and add some currency conversion. In the Add business Intelligence icon at the top left, select Define Currency Conversion. On the next screen, we want to select our currency rate table, the currency we’re pivoting on (the one you want to be the reporting standard in a case of a many to one implementation), and whether it is in foreign currency per dollar or dollar per foreign currency.

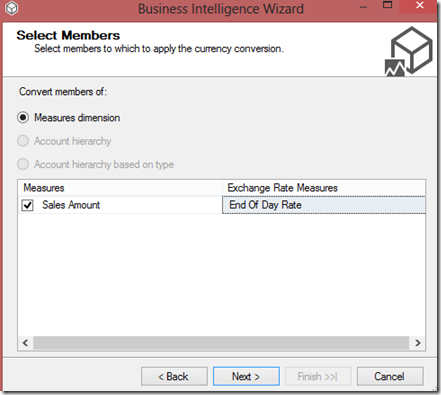

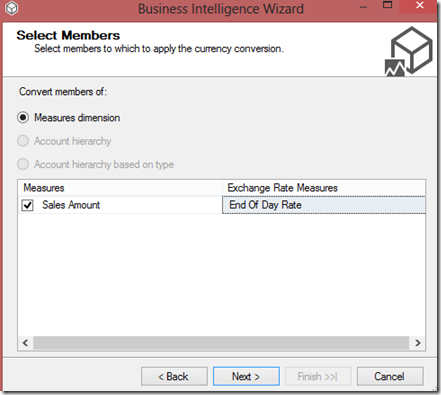

Click Next, and then select all of the measures that will be affected by the currency conversion:

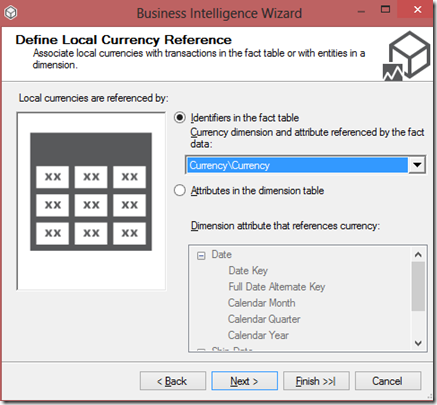

On the last screen, select whether the conversion is one-to-many, many-to-many, or many-to-one. As many-to-one is the most common type of currency conversion requested, we’re going to select that here and select Next >

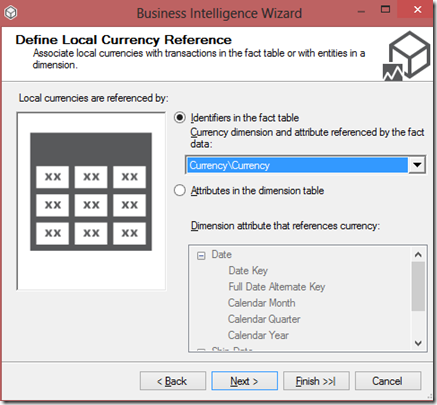

On the last screen, select the currency\currency dimension to specify that the fact table references the currency dimension directly, and select Finish

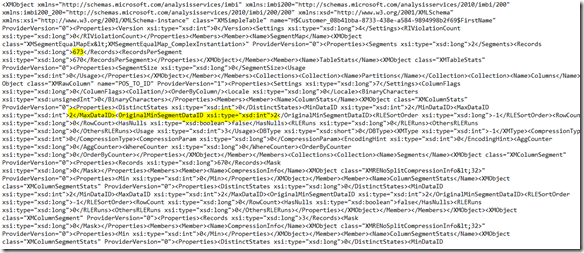

Click Finish again, and the wizard will run through. If you go to the calculations tab, you’ll see the script that the wizard generated:

// <Currency conversion>

// Currency conversion wizard generated script.

// Currency conversion type: ManyToOne

// Selected members to be converted: Sales Amount

// Please be aware that any changes that you decide to make to it may be overridden the next time you run the Currency Conversion wizard again.

// All currency conversion formulas are calculated for the pivot currency and at leaf of the time dimension

Scope ( { Measures.[Sales Amount]} );

Scope( Leaves([Date]) ,

[Reporting Currency].[US Dollar],

Leaves([Currency]));

// Convert Local value into Pivot currency for selected Measures that must be converted with Measure rate [End Of Day Rate]

Scope( { Measures.[Sales Amount]} );

This = [Reporting Currency].[Local] / Measures.[End Of Day Rate];

End Scope;

End Scope; // Leaves of time, source currency and pivot currency

End Scope; // Measures

// End of the currency conversion wizard generated script

// </Currency conversion>

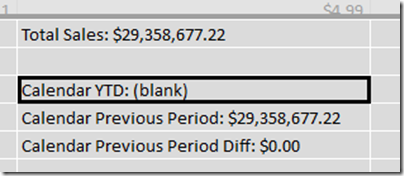

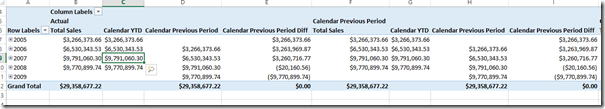

Also, if you go back to the Dimension Usage tab, you’ll see that the wizard has created a new dimension for the reporting currency. This is currency that the user will select to specify what currency it will be reported in. Process the cube, and we’re good to go!

Happy cubing!